ASHRAE’s Technical Committee 9.9 (“TC9.9”) recently published the fifth edition of the Thermal Guidelines for Data Processing Environments. Widely recognized as the standard for defining the environmental requirements of data centers, the guidelines published by TC9.9 are developed with input from a diverse cross-section of contributors from both the IT equipment vendor community and the data center industry.

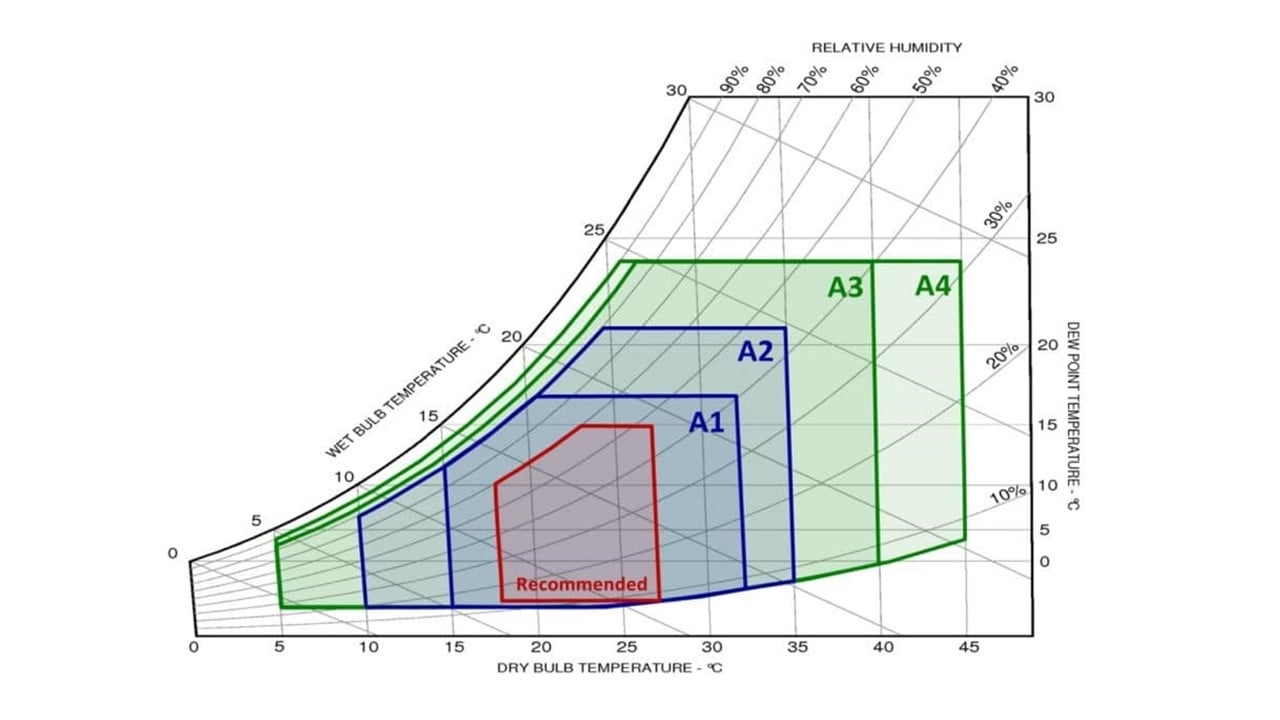

Historically, updates to the TC9.9 Thermal Guidelines have relaxed the operating envelope standards for data centers, enabling owners to run higher inlet temperatures in their data centers and improve energy efficiency as a result. For example, ASHRAE’s 2011 expansion of the recommended operating range for class A1 equipment raised the top end of the recommended operating dry bulb temperature of a data center to 80.6°F, with allowable ranges that stretched beyond that (note that the temperatures indicated are measured at the server inlet).

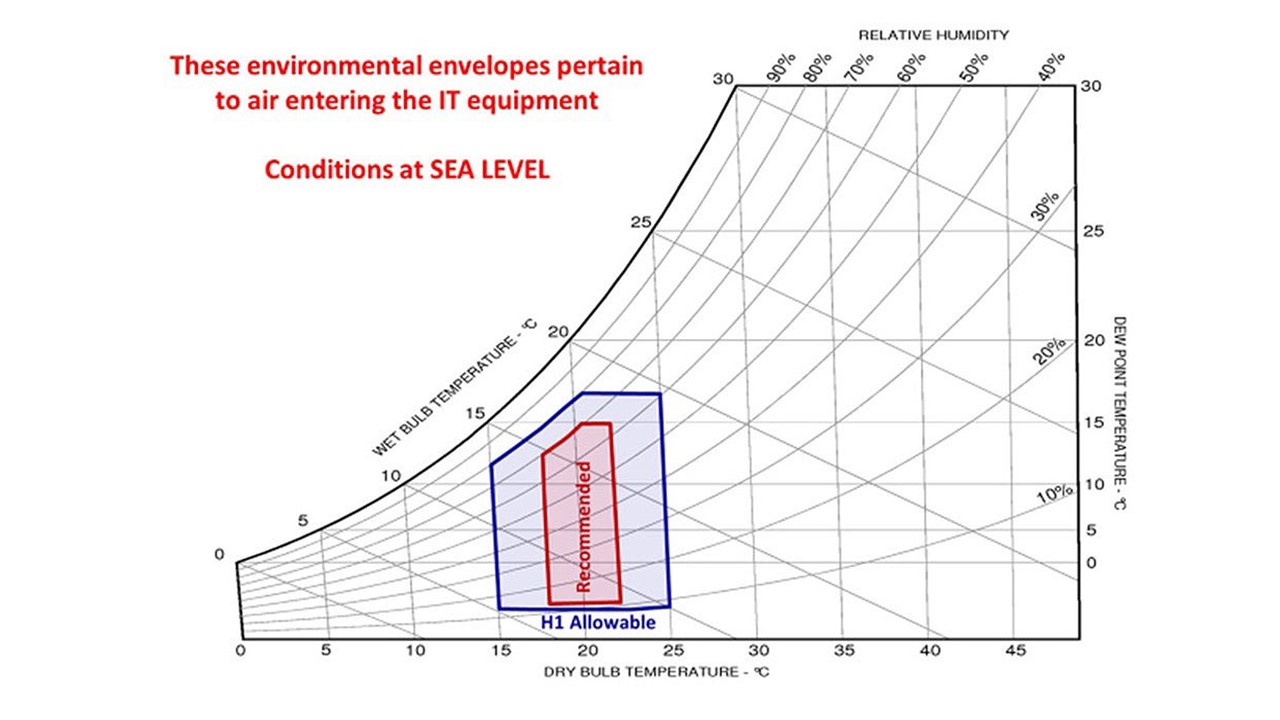

Somewhat surprisingly, in the recent fifth edition of the guidelines, ASHRAE introduced a new classification of equipment – H1 – and tightened the recommended operating envelope for this equipment as compared to class A1. The new H1 class includes systems that “tightly integrate a number of high-powered components” like server processors, accelerators, memory chips, and networking controllers. Based on the recommendations in the fifth edition, ASHRAE is indicating that these H1 systems need a narrower temperature band when air-cooled strategies are used: a recommended range of 64.4°F – 71.6°F, as compared to 64.4°F – 80.6°F for class A1. See image 2.
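To make the two recommended ranges easier to compare, here is a minimal Python sketch that converts them to Celsius and checks a measured server inlet temperature against each class. The names `RECOMMENDED_F`, `f_to_c`, and `within_recommended` are hypothetical, and the sketch covers only the dry bulb figures quoted above. It ignores humidity limits and the wider allowable ranges.

```python
# Recommended dry bulb inlet ranges in °F, taken from the figures quoted in this article.
# Humidity limits and the wider "allowable" ranges are intentionally left out.
RECOMMENDED_F = {
    "A1": (64.4, 80.6),
    "H1": (64.4, 71.6),
}


def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def within_recommended(equipment_class: str, inlet_f: float) -> bool:
    """Return True if the inlet temperature falls inside the recommended range."""
    low, high = RECOMMENDED_F[equipment_class]
    return low <= inlet_f <= high


for cls, (low, high) in RECOMMENDED_F.items():
    print(f"{cls}: {low}°F to {high}°F ({f_to_c(low):.1f}°C to {f_to_c(high):.1f}°C)")

# A 75°F inlet is acceptable for A1 equipment but too warm for H1 equipment.
print(within_recommended("A1", 75.0))
print(within_recommended("H1", 75.0))
```

In Celsius the ranges work out to 18°C to 27°C for A1 and 18°C to 22°C for H1, so the H1 recommendation lowers the upper limit by 5°C (9°F).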

Many data center operators at research-based institutions already struggle with the heterogeneity of their data center – the high density nature of their research and high-performance computing (HPC) systems differs drastically from the profile of their enterprise computing systems. For any operators of HPC systems, this change in ASHRAE’s recommendations is important, as several factors will need to be considered:

- Defining H1 – ASHRAE does not define which specific equipment is type H1, nor do IT equipment manufacturers typically define equipment classes in their specifications. Owners and their outside resources will need to work closely with their equipment providers to identify if HPC equipment being procured and installed in the data center should be classified as H1 (and thus subject to a narrower operating temperature band) or A1.

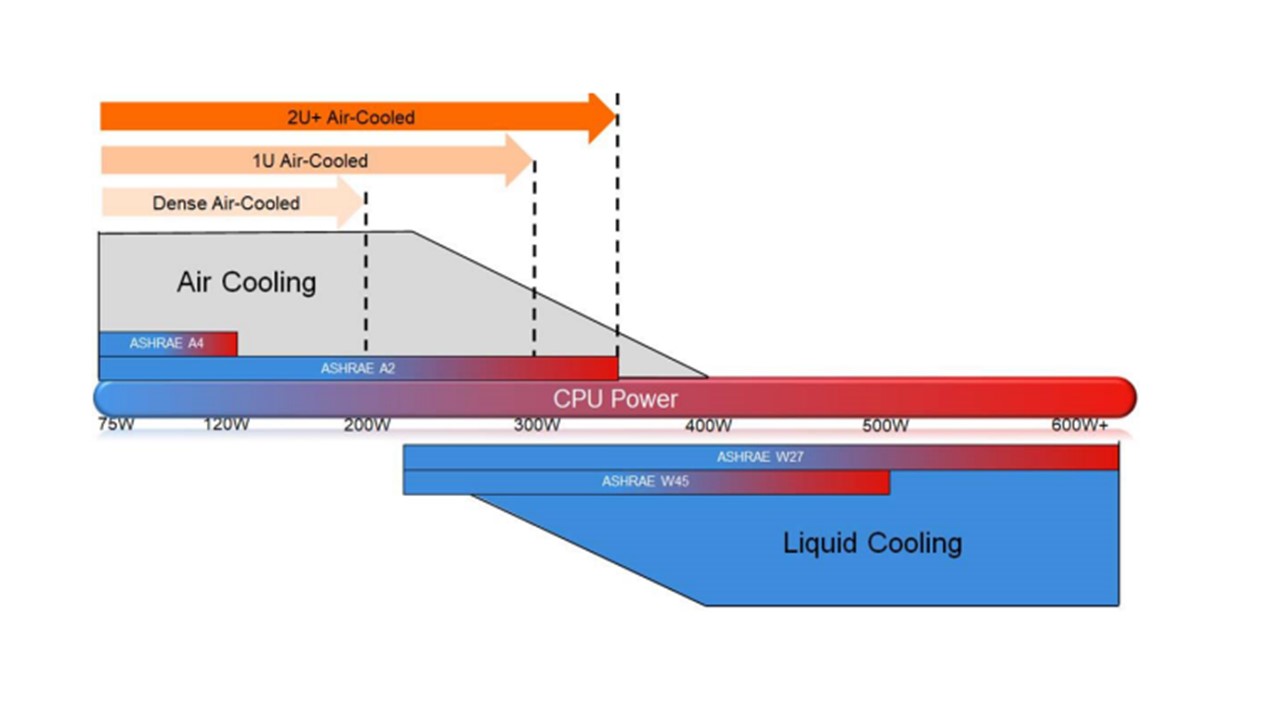

- Liquid Cooling – Given the narrower band for air-cooled systems in the H1 class, liquid-cooled equipment may become more prevalent. While the majority of HPC equipment manufactured and installed in data centers today is air-cooled, the push to increase power density in smaller form factors may drive the adoption of liquid cooling. Initial data from ASHRAE indicates that 1U or 2U servers with power requirements approaching 400W are difficult to cool with air alone. See image 3.

- Reassessing Current Data Centers – For research-based institutions, the process of procuring HPC systems tends to revolve around the grant acquisition process. As a result of a grant award, large HPC installations can be added to a data center without significant notice. Operators of data centers with research and high-performance computing systems need to evaluate their current data center and determine whether their cooling infrastructure can support the different inlet supply temperatures required by A1 and H1 equipment. This diversity presents a challenge: if you reduce the entire data center to fall within the H1 envelope, you are sacrificing energy efficiency in the process. However, for many legacy data centers, delivering different setpoints to different equipment may be difficult.
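The single-setpoint trade-off in the last bullet can be put in numbers. The sketch below is illustrative only and assumes a hypothetical helper, `shared_band`. It intersects the recommended dry bulb ranges quoted earlier to find the band that satisfies every class at once, then shows how much upper-end headroom A1 equipment gives up when the whole room is held to that band.

```python
def shared_band(envelopes):
    """Intersect (low, high) ranges; return None if no common band exists."""
    low = max(lo for lo, _ in envelopes.values())
    high = min(hi for _, hi in envelopes.values())
    return (low, high) if low <= high else None


# Recommended dry bulb inlet ranges in °F, as quoted in this article.
envelopes_f = {"A1": (64.4, 80.6), "H1": (64.4, 71.6)}

band = shared_band(envelopes_f)
# Upper-end headroom that A1 equipment loses under a single room-wide setpoint.
headroom_lost = envelopes_f["A1"][1] - band[1]

print(band)
print(round(headroom_lost, 1))
```

Under these assumptions the shared band is simply the H1 range, and A1 equipment loses 9°F of recommended headroom at the top end. That lost headroom is the efficiency cost of cooling a mixed room to one setpoint.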

For owners today that are utilizing high-performance computing, ASHRAE’s change to include the H1 classification is significant. Failure to operate within the recommended envelope for the appropriate equipment classification in your data center can lead to warranty issues and shortened equipment lifecycles. Owners should begin by evaluating their current data center, assessing whether they have the capacity to support H1 recommended temperatures (without changing inlet temperatures for the remainder of the data center), and developing a plan for supporting this narrower operating range in the near term.

Questions on high-performance computing? Email us at info@ledesigngroup.com.